The Isle of Wight Pension Fund has implemented an improvement plan in relation to approximately 1,500 records containing “significantly problematic” conditional data, as it grapples with longstanding issues over member information.

According to a recent Pensions Regulator survey of administration in public sector pension plans, including local government schemes, 15 per cent of schemes did not have adequate processes in place in 2017 for monitoring data accuracy and completeness, up from 11 per cent in 2016.

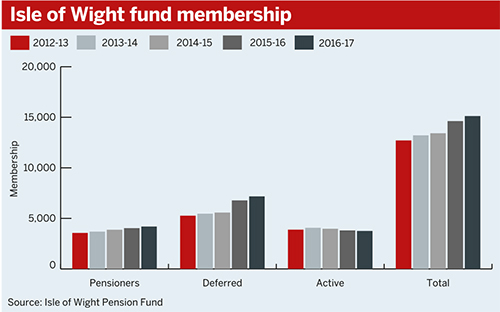

The c.£562.8m Isle of Wight fund has devised a three-pronged strategy to overcome its data woes in time for its 2019 actuarial valuation.

Minutes from a pension board meeting that took place on February 27 2018 indicate that the fund agreed to a one-off project to outsource the cleansing of its pre-March 31 2017 data through the national Local Government Pension Scheme procurement framework.

It was agreed that data from April 2017 onwards would be cleansed using a “manual data validation spreadsheet” designed by the scheme’s administrative team.

New data being entered into the system will be uploaded via the i-Connect data portal. A data improvement officer was appointed in mid-May, according to a spokesperson for the Isle of Wight Council.

The improvement plan covers the scheme’s data quality, improvements to its website and guaranteed minimum pension (GMP) reconciliation.

According to the minutes, “the [GMP] data which tended to be poor was the scheme specific data such as the salary posting information and status”.

The minutes state that “poor data quality had been noted as an issue for a number of years”.

The spokesperson said: “These issues have arisen through receipt of poor quality and incomplete data from employers’ external payroll providers. Examples of the data missing would be leaver forms and information and changes in job role.”

Search underway for outsourced solution

The scheme has purchased a “templated website solution” from consultancy Hymans Robertson, “which would allow fund-specific pages to be added”, according to the minutes.

The search for an outsourced solution to issues with the scheme’s data prior to its last valuation is now underway.

“A request for further competition, using Lot 2 of the national LGPS framework for third-party administration services, was issued via the southern business portal on May 25 2018,” the spokesperson said. “It is expected that the award will be made at the end of June 2018.”

Conditional data refers to the data items that are key to running the scheme and meeting its legal obligations, such as employment records including salary, contribution history and estimated pension value.

Common data is the basic member data used to identify scheme members, such as name and date of birth.

Garry Wake, managing director at administration company Trafalgar House, said trustees should not assume a direct correlation between the quality of common and scheme specific data sets.

“Trustees can spend a long time looking at common data and improving the quality of contact information,” he said, but “are often surprised when they undertake more detailed scheme specific checks and find that the underlying liabilities they are basing their valuation and member calculations on is incorrect”.

Fergus Clarke, executive director at the Pensions Administration Standards Association, stressed the importance of competent scheme administration in the run-up to events such as valuations. Corporate schemes looking to undertake risk transfers can also be stung by bad data, he said.

“Typically you might see a 5-10 per cent premium on your buyout policy if your data isn’t accurate, and the buyout provider has to go and do that data-clean programme at that time,” Clarke said.

Older data will prove more problematic

In May, the regulator announced it would be getting tougher on public sector schemes that fail to demonstrate adequate levels of administrative competency.

Vanessa Burke, data solutions director at consultancy Willis Towers Watson, noted the technological difficulties faced by schemes trying to process older data.

Public service schemes are in the midst of a GMP reconciliation process that demands the management of swaths of old member information, but bad data can hinder funds’ efforts.

“Much of the older data held by schemes pre-dates computerisation,” Burke said. “Many schemes have a reliance on older paper files or scanned copies of these for their members and may have been through several mergers or changes in administration over the years, making identifying and using that older data more challenging,” she added.